I recently had a professor watch me handwrite an em dash in class and tell me he had never seen someone use one without ChatGPT telling them to. I was taken aback, since he had just watched me put pen to paper with no other devices out.

In the few years since generative AI became a mainstream tool in academia, human vocabulary has steadily changed. Words like “delve,” “realm” and “meticulous” have worked their way out of plagiarist territory and into our normal, everyday dialogue.

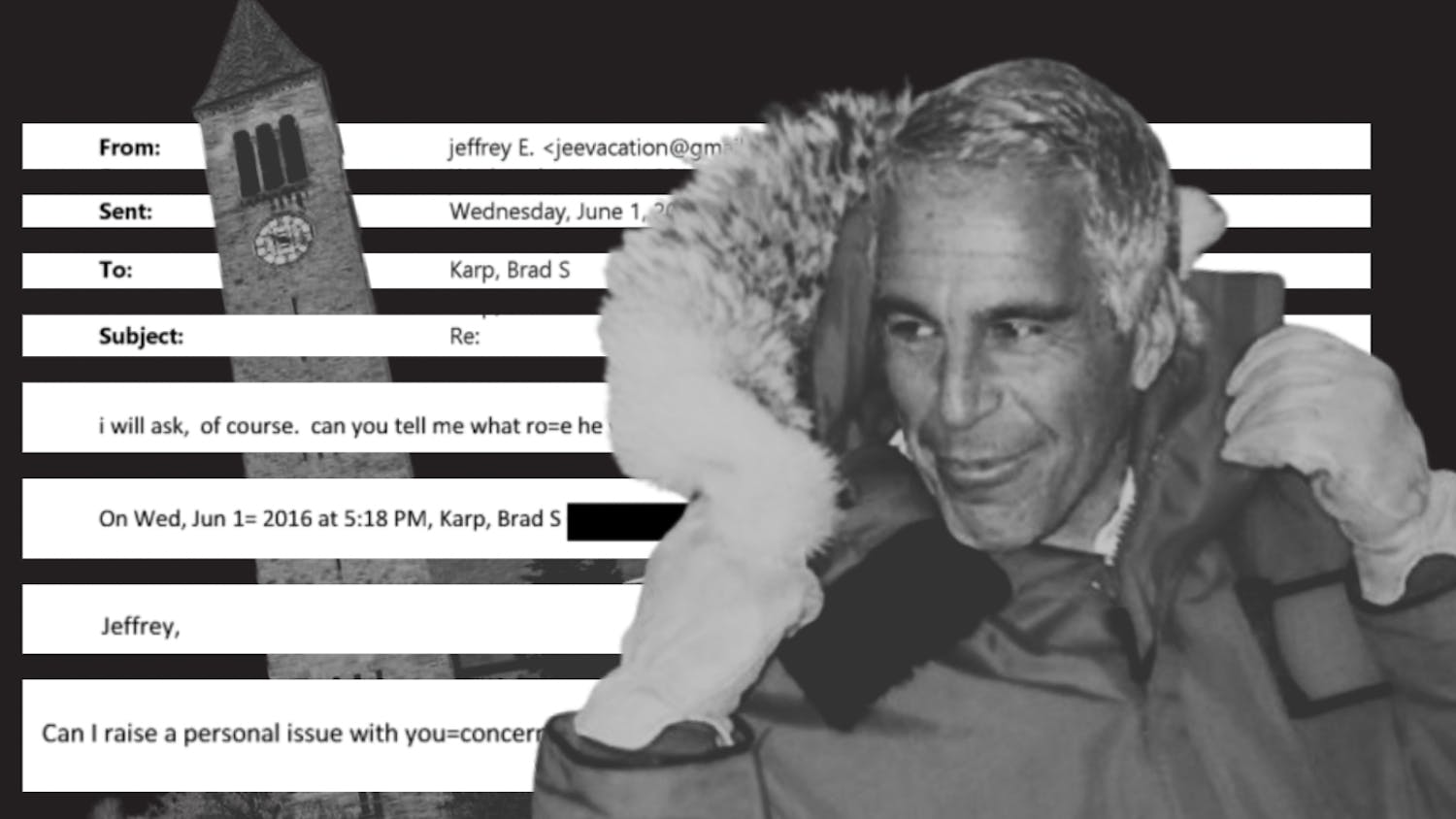

As an academic collective, the American university has struggled to find a workable solution to the problem of detecting generative AI use in classrooms. Certainly Cornell does not know what to do: at least five different panels were held across departments in the last month to discuss just this issue. In fact, Cornell has put together an entire committee to try to solve the problem of how to move forward.

For now, we are faced with a seemingly insurmountable task: How do we avoid sounding like a chatbot?

There is a case to be made that we should want to sound like these chatbots; presumably they have read a lot more than we have and can formulate a seemingly intelligent and coherent thought faster than we can blink. Would it really be terrible to sound so knowledgeable and capable? Isn't this what should happen to us anyway after completing our undergraduate degrees at an elite Ivy League institution? Surely by the time we leave we should sound more or less intelligent, so why not speed up the process?

But wouldn’t it be nice to always sound like yourself? Wouldn’t it be nice to know the ideas on the page are actually yours, shaped by the readings you did, the time you spent trying to learn something you didn’t previously know and the thinking you actually engaged in?

My case against sounding like a chatbot is simple: I would like to claim that I can pass the Turing test. This is evidently becoming harder and harder to claim as a human. Soon, we may need an anti-Turing test, in which humans prove to themselves that they are not chatbots. Ultimately, the problem with sounding more and more like a generative AI model is not inherently that it is AI, but that it is decidedly unoriginal.

Originality is, in many ways, what allows us to uphold and maintain interpersonal relationships. Forget your class essay — wouldn't you like to know what to do when a friend needs something only you can help them with? What if a loved one needs comfort at a time of distress? Once we start turning to these AI models in order to both ask and answer these questions, we are no longer talking to each other, but through each other.

Perhaps, then, the question of AI should not be addressed only as a classroom issue, but also as one we urgently need to take up with ourselves as agents of thought.

We are not yet living among AI in the way Philip K. Dick imagined in “Do Androids Dream of Electric Sheep?” But we are perhaps living in an even stranger stratosphere, one where AI operates through us, where we are not fully agents of self but instead tools of expression that let generative models exist outside of cyberspace.

So when you are writing a paper with ChatGPT or using it to respond to a professor's emails or a friend's texts, consider that they might also be using it to write back. Who are you really talking to?

Nina Davis '26 is an opinion columnist in the College of Arts & Sciences. She served in the 142nd Editorial Board as photography editor. Her column Making Meaning is interested in asking questions that do not have easy answers. She can be reached at ndavis@cornellsun.com.